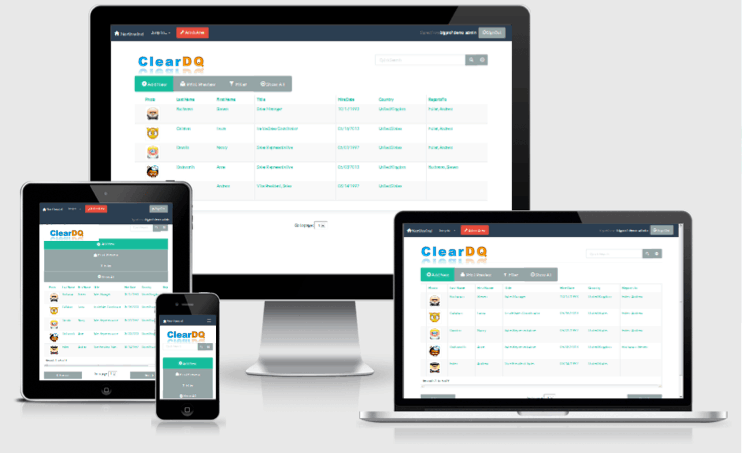

This is the workbench you need.

Many organizations (and even regulators) have a limited, distorted or no common data language to describe their commercial, industry or participant landscape, or that language directly contradicts globally understood industry vocabulary.

Many organizations have only crude (if any) frameworks that link data assets and data capabilities into a commercially engineered, value-governed data ecosystem. This results in poor data ownership, poor data investment decision making and an inability to prioritize data investments commercially.

Many organizations have little or no ability to correctly identify, classify and uniquely name data assets or data capabilities, whether they are on the balance sheet or not. This impedes valuation (at any speed).

Many organizations have little or no language for, or understanding of, which economic metrics actually matter when refining the commercial value of data assets and data capabilities. Too often, Analytics (the value output) operates over partial or incomplete data foundations, which destroys value. The true economic value of many Data Analytics efforts is often lost before they begin, when viewed across the "complete" Data Landscape rather than in isolation. In some cases, Data Management is so poor that it is slowly bankrupting organizations commercially.

Many organizations have rudimentary, immature or no cogent methods to intelligently invest in their data assets and data capabilities to maximise value creation and minimise or prevent value destruction. Typically, most organizations will invest enthusiastically in piecemeal value creation, like Data Analytics, with little or no economic view of all Data Capabilities across the "complete" Data Landscape. This results in a false economy and the artificial success of data initiatives.

Many organizations have a limited, temporary or no funding model to build and sustain critical core Data Foundations. All data assets and capabilities require Data Foundations in order to be technically, legally and commercially controlled. Failure to fund Data Foundations usually results in excessive, duplicated shadow data costs paid throughout an organization, in unlinked or unrelated spending silos. It also results in unsustainable Data Management and chronic, uncontrollable Data Quality problems with no relief possible; in fact, Data Quality progressively gets worse.

Many organizations have great difficulty managing, or cannot manage at all, the direct link between business processes (whether automated or human powered) and all the data assets or data capabilities those processes consume (or generate). As a result, Change Management, however it may appear, cannot be controlled well enough to make improvements to Data Assets. This link needs to be understood, yet it is rarely taught in data training and rarely, if ever, spoken of at Data conferences.

Many organisations have great difficulty managing the link between people and the Data Assets they access, and for what "value generating" business purpose. People often require access to datasets via tools or reports. Again, the linkage between people and these data assets is rarely (if ever) managed well. If this linkage is missing and unmanaged, the result is a spiralling duplication of datasets in CSV or XLS formats... in what we describe as literally a "Spreadsheet Hell".

Many organizations duplicate data work and knowledge work over the top of the same or similar Data Assets. Centralized Data Support teams, more often than not, give way to decentralized shadow data and knowledge teams, and the link between Data Assets and Knowledge Work is poorly understood or lost completely. Again, this results in the accelerated duplication of datasets in CSV or XLS formats... in what we describe as literally a "Spreadsheet Hell". It also results in chronic and uncontrollable Data Governance failures.

Since 2011, in the USA alone, data asset impairment (under IAS 36 or not) has been estimated to cost that economy a shameful $3.1T per year. (*,1,2,3)

The software and data industry is not even embarrassed, and remains largely ignorant of the magnitude and root causes of this pandemic.

Whilst this figure remains widely understood, the lack of response has been deafening, and traditional Data Quality approaches of the last 10 years are not keeping pace with the scale of this problem.

(*) Multiple Sources:

(1) Tom Redman, "Bad Data Costs the U.S. $3 Trillion Per Year", Harvard Business Review

(2) Hollis Tibbetts, "$3 Trillion Problem: Three Best Practices for Today's Dirty Data Pandemic"

(3) Sonia Mezzetta, "Big Data Quality: Data Quality Today vs. 10 Years Ago", IBM

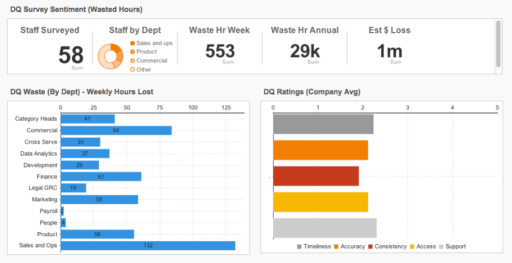

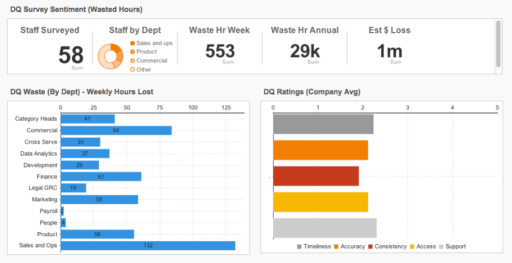

Do a quick calculation.

As just one minimum-value item of many, ClearDQ consistently identifies, measures and shows the root causes of an unlockable 20% (on average) of your Knowledge Workforce.

For example, for one famous organization, this is a $700m per annum opportunity in recoverable value.
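To make the quick calculation concrete, here is a minimal back-of-envelope sketch in Python. It assumes, as a simplification of our own, that the ~20% average applies to the annual fully loaded cost of the knowledge workforce; the function names, headcount and per-worker cost are hypothetical placeholders, not ClearDQ outputs. Under that same assumption, the $700m example corresponds to roughly $3.5bn of annual knowledge-workforce cost.

```python
# Hypothetical back-of-envelope estimate. ASSUMPTION: the ~20% average
# applies to the annual fully loaded cost of the knowledge workforce.
RECOVERABLE_SHARE = 0.20  # average share quoted in the text


def recoverable_value(headcount: int, avg_cost_per_worker: float,
                      share: float = RECOVERABLE_SHARE) -> float:
    """Annual value recoverable from the knowledge workforce (hypothetical model)."""
    return headcount * avg_cost_per_worker * share


def implied_workforce_cost(recoverable: float,
                           share: float = RECOVERABLE_SHARE) -> float:
    """Annual workforce cost implied by a given recoverable value."""
    return recoverable / share


if __name__ == "__main__":
    # Hypothetical organisation: 5,000 knowledge workers at $120,000 each.
    print(f"Recoverable: ${recoverable_value(5_000, 120_000):,.0f} per annum")
    # Work backwards from the $700m example to the implied workforce cost.
    print(f"Implied workforce cost: ${implied_workforce_cost(700e6):,.0f} per annum")
```

Replace the placeholder headcount and cost with your own figures; the `share` parameter lets you test more conservative percentages than the quoted average.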

Now that's data value we think is worth recapturing, and someone in that organization (Board, Shareholders, Regulator, Business Leaders) wants to reclaim that kind of data value quickly, accurately and efficiently.

Once the evidence for this value opportunity is surfaced with the root causes, someone wants that value put back on the table.

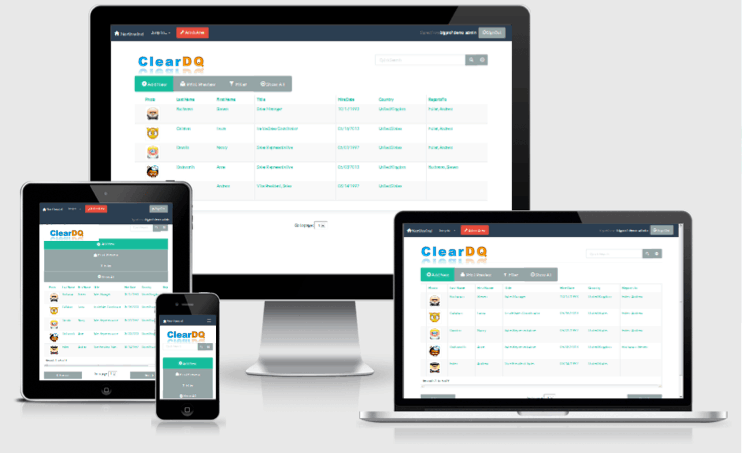

The ClearDQ software and methodology can be downloaded for free from GitHub and executed without our help.

Our Education and Advisory services can be purchased as and when you need.

Alternatively, you can purchase the ClearDQ suite for your entire company, hosted by us as a service for $50 per user per annum, with added extras thrown in.

The average payback on ClearDQ is $20,000 per employee.

We typically take 2-3 weeks to take initial measurements of organisations of any size, in any location (and in any language).

We recommend running a pilot across a smaller sample group of business units to build your language, confidence and competency in this new skill; a pilot also helps make our algorithms more precise.

If you don't like our software approach, you can still learn our method and build your own software. We can teach you, help you and support you in whatever capacity you need to succeed.

"New Economy" businesses are businesses and business models that were born from "Day 1" in the new digital economy.

They are usually not encumbered by legacy systems and data baggage (if they have any at all) to integrate, replace or decommission.

They typically offer a single vertical product or offering, making their web-scale ambitions easier and faster to achieve.

ClearDQ can help designers, investors, technologists and data leaders in these "New Economy" businesses be more targeted with the data investments they make.

Traditional markets that are data-intensive or information-intensive include Banking, Insurance, Energy Retail, Healthcare Administration, Government Services, Publishing, Broadcasting, Airlines, Mining, FMCG, all forms of Retail, Transport and Advertising, to name a few.

The long-standing product(s), service(s) and value proposition for many businesses in these sectors face fierce disruption from digital competitors in some (or all) areas of their business model.

Data is a significant value creation (or destruction) lever for these organisations, and many struggle to find their way forward with data investments without the economic signals that ClearDQ can provide.

Data Leaders - includes roles like the Chief Data Officer (CDO), Chief Data and Analytics Officer (CDAO), Head of Data Analytics, Head of Data, VP of Data, Head of Information Architecture, etc.

These roles are under pressure to govern data assets and capabilities in a way that creates maximum commercial value from data, at minimal cost, whilst mitigating data risks.

ClearDQ provides Data Leaders with the right economic signals around their data assets, data products and data capabilities.

Operational Performance Leaders - includes roles like the Chief Operating Officer (COO), Chief Marketing Officer (CMO), VP of Sales, VP of Marketing, VP of Shared Services, Business Support Manager, etc.

These roles are under pressure to govern business processes and information in a way that maximises efficiency and drives innovation in productivity and customer experience.

ClearDQ provides Operational Performance Leaders with the right economic signals around the business processes that produce and consume operational data, so that fast, accurate data flows into all operational processes.

Technology Leaders - includes roles like the Chief Information Officer (CIO), Chief Technology Officer (CTO), Data Center Manager, Cloud Services Manager, Application Services Manager, Application Development Manager, Technology Support Manager, etc.

These roles are under pressure to govern technology assets and decision making (which hold, secure and provision raw data, data platforms and data software) in a way that makes data technologies less expensive, more reliable, more scalable and more performant.

ClearDQ provides Technology Leaders with the right economic signals about which of their data software, data platforms and data tools add the most economic value.

Finance Leaders - includes roles like the Chief Financial Officer (CFO), etc.

These roles are under pressure to govern all business assets (data included) in a way that maximises innovation and financial returns.

ClearDQ provides Finance Leaders with the right economic signals so that better investment decisions can be made regarding Data Assets, Data Products and Data Capabilities.

VC & Investment Leaders - includes roles like the Investment Manager, Asset Manager, Portfolio Manager, etc.

These roles are under pressure to seek new and exciting investments (some that will include vast troves of data assets), and ensure existing investments are performing well during the entire invest, acquire and exit lifecycle.

ClearDQ provides VC & Investment Leaders with the right economic signals around Data Assets to guide M&A discussions, or to help identify greater data risks or data value gaps when comparing investments.

Business Valuation Leaders are increasingly under pressure to deliver accurate, credible and explainable valuations of organizational entities that may hold significant Data Assets.

ClearDQ provides Business Valuation Leaders with the right economic signals around the Data Assets to guide exit sales, M&A discussions or simply help price Data Assets, Data Products and Data Capabilities for refinancing or sale.

Regulatory Leaders are increasingly under pressure to deliver language, education, guidance and frameworks to participants in their respective industries (Banking, Insurance, Energy, etc.).

ClearDQ provides Regulatory Leaders with the extended industry-wide economic language and signals around the Data Assets to guide their assistance to industry participants.

ClearDQ's tooling, and its methodology in particular, has been described in many ways, but Data and Infonomics experts are genuinely impressed and complimentary.

High praise. We love it.

ClearDQ blends several proven, longstanding and mature data methodologies, and extends them with deep Infonomics/Monetization thinking (not previously conceived or well understood until now).

In particular, ClearDQ approaches the problem of "bad" data from the unique "Outside-In" perspective, first documented by Jack Olson in his 2003 book “Data Quality: The Accuracy Dimension”.

The data industry has never seriously picked up on this subtle and counter-intuitive gem.

ClearDQ takes Jack Olson's approach more seriously than most. The "Outside-In" approach matured first in the Business Process industry, but was never seriously adapted to the data industry.

In our extensive experience, this Outside-In method consistently delivers a faster, more accurate commercial view of Data Asset, Data Product and Data Capability dynamics than any "Inside-out" approach.

One of ClearDQ's clever attributes is its ability to flexibly consume more and more metadata and correlate more economic evidence.

ClearDQ learns.

Faster automation and smarter algorithms make your Infonomic journey one that is based on evidence, not guesswork.

This allows ClearDQ to be the central clearing house of Data Economics around which an organization can base, or pivot, its Data Investment decisions.